RAI Newsletter - Research Highlights Issue, February 2023

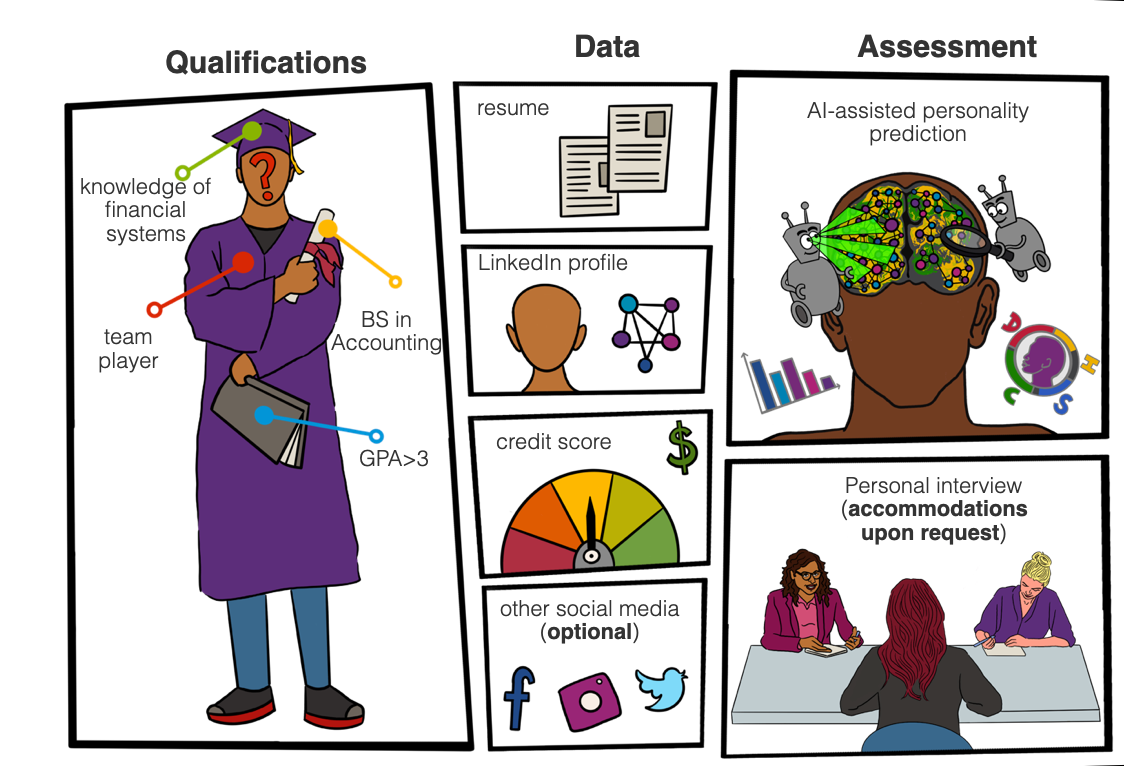

New York City’s Local Law 144 is not only about bias in hiring!

Job seekers and employees have the right to know what qualifications and characteristics automated hiring tools are screening for and picking up! We need nutritional labels for job postings and decisions! Read Julia Stoyanovich’s testimony before the New York City Department of Consumer and Worker Protection on January 23. Julia spoke at the virtual hearing from her Responsible Data Science classroom.

What we’ve been up to:

Responsible AI Research Program – Fall 2022 Project Showcase.

As part of a continuing effort led by R/AI to support Ukrainian students, 13 research fellows from the Ukrainian Catholic University and other universities in Ukraine collaborated with faculty and graduate students from New York University, the University of Washington, and the University of Edinburgh on six research projects on Responsible AI during the Fall 2022 semester. Watch the Fall 2022 project showcase and stay tuned for future updates from this program!

The RAI Rockstar Series welcomed its first speaker, Sihem Amer-Yahia

The RAI Rockstar series aims to improve the representation of women and members of historically under-represented groups in AI careers. Our first speaker, Prof. Sihem Amer-Yahia, is a Silver Medal CNRS Research Director and Deputy Director of the Lab of Informatics of Grenoble. She works on exploratory data analysis and fairness in job marketplaces.

During her visit in November 2022, Sihem delivered two insightful talks on AI-Powered Data-Informed Educational Platforms and the Commodification of Data Exploration.

She also engaged with the NYU community through a fireside chat and shared her experience as a woman, a scientist, an engineer, and a person of diverse heritage in the field of computer science and data science research.

What we’re looking forward to:

Moshe Vardi, the second RAI Rockstar to visit NYU, arrives this Friday!

The RAI Rockstar series continues with a visit by Moshe Vardi, University Professor and George Distinguished Service Professor in Computational Engineering at Rice University. Moshe is the recipient of several awards, including the ACM SIGACT Goedel Prize, the ACM Kanellakis Award, the IEEE Computer Society Goode Award, and the EATCS Distinguished Achievements Award. He is a Senior Editor of Communications of the ACM, the premier publication in computing. His talk, “How to be an Ethical Computer Scientist,” will be held at 11am on Friday, February 10, 2023, at 60 5th Avenue, Room 150, New York, NY 10011.

What we have been working on:

Counterfactuals for the Future

Lucius E.J. Bynum, Joshua R. Loftus, and Julia Stoyanovich

In 2023 Proceedings of the AAAI Conference on Artificial Intelligence (AAAI’23)

Counterfactuals are often described as ‘retrospective’ because they focus on hypothetical alternatives to a realized past. In this work, we study when counterfactuals can instead inform us about the future. This can happen when the exogenous noise terms of each unit exhibit enough unit-specific structure or stability. We introduce “counterfactual treatment choice,” a type of treatment choice problem that motivates the use of forward-looking counterfactuals. We then explore how mismatches between interventional reasoning (the traditional approach) and counterfactual reasoning during treatment choice can lead decision-makers to counterintuitive results.
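To make the interventional/counterfactual distinction concrete, here is a toy sketch of our own. It is not the model or method from the paper. It assumes a hypothetical structural equation Y = T · (U − COST) with binary treatment T, and it assumes each unit's noise U stays fixed from one period to the next. Interventional reasoning picks one treatment for everyone from the average effect. Counterfactual reasoning first recovers each unit's U from its past outcome (abduction) and then chooses a treatment per unit. All names and numbers below are illustrative.

```python
# Toy structural causal model (an illustrative assumption, not the paper's model):
#   Y = T * (U - COST),  T in {0, 1}
# Each unit's exogenous noise U is assumed stable across time periods.
COST = 1.0


def outcome(t: int, u: float) -> float:
    """Structural equation for the outcome Y."""
    return t * (u - COST)


def interventional_choice(past_outcomes: list[float]) -> int:
    """One treatment for everyone, based on E[Y | do(T=1)] - E[Y | do(T=0)].

    Assumes every unit was treated in the past period, so the mean past
    outcome estimates E[Y | do(T=1)]; E[Y | do(T=0)] is 0 in this model.
    """
    avg_effect = sum(past_outcomes) / len(past_outcomes)
    return 1 if avg_effect > 0 else 0


def counterfactual_choice(past_outcome: float) -> int:
    """Per-unit choice: abduct U from the past, then compare both counterfactual outcomes."""
    u_hat = past_outcome + COST  # abduction: invert Y = 1 * (U - COST)
    return 1 if outcome(1, u_hat) > outcome(0, u_hat) else 0


if __name__ == "__main__":
    # Hypothetical population: nine units with low U, one with high U.
    us = [0.5] * 9 + [4.0]
    past = [outcome(1, u) for u in us]  # everyone was treated last period

    t_all = interventional_choice(past)
    future_interventional = sum(outcome(t_all, u) for u in us)
    future_counterfactual = sum(
        outcome(counterfactual_choice(y), u) for y, u in zip(past, us)
    )
    print(future_interventional, future_counterfactual)  # 0.0 3.0
```

In this made-up population the average treatment effect is negative, so the interventional rule treats no one. Because the high-U unit's noise persists, the per-unit counterfactual rule treats that unit and obtains a better future outcome. This shows one simple way the two styles of reasoning can disagree.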

Read the full paper, and come see us at the Sunday poster session (6:15-8:15 PM, February 12th) or virtually at AAAI 2023.

Counterfactual Fairness is Basically Demographic Parity

Lucas Rosenblatt and Teal Witter

In 2023 Proceedings of the AAAI Conference on Artificial Intelligence (AAAI’23)

Making fair decisions is crucial to ethically implementing machine learning algorithms in social settings. In this paper, we looked at the celebrated definition of counterfactual fairness [Kusner et al. 2017] and showed analytically that, in many settings, it is basically equivalent to statistical/demographic parity, which is a far simpler fairness constraint. We validated this finding experimentally and noted some other limitations of certain algorithms that try to satisfy counterfactual fairness. Our work is motivated by a belief that transparency and simplicity in algorithmic fairness lead to more trustworthy algorithms.
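Demographic parity requires the positive-prediction rate P(Ŷ = 1 | A = a) to be the same for every group a. That is part of why it counts as a simple constraint. The minimal sketch below measures how far a set of binary predictions is from satisfying it. The function name and example data are hypothetical. The sketch does not implement counterfactual fairness or reproduce the paper's equivalence argument.

```python
from collections import defaultdict


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in positive-prediction rate P(Yhat = 1 | A = a) across groups.

    Assumes binary predictions (0 or 1). A gap of 0 means demographic parity holds exactly.
    """
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for yhat, a in zip(predictions, groups):
        totals[a] += 1
        positives[a] += yhat
    rates = [positives[a] / totals[a] for a in totals]
    return max(rates) - min(rates)


# Hypothetical example: group "a" is selected at rate 0.5, group "b" at rate 0.25.
preds = [1, 1, 0, 0, 1, 0, 0, 0]
grps = ["a"] * 4 + ["b"] * 4
print(demographic_parity_gap(preds, grps))  # 0.25
```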

Read the full paper, and come see us at the Saturday poster session (6:15-8:15 PM, February 11th) or virtually at AAAI 2023.

Events and Press Coverage

Members of R/AI spoke in the press about our recent research results and the recent developments in AI regulation.

NYU Libraries presented the inaugural exhibition of projects created by This Is Not A Drill faculty and student fellows that grappled with the climate crisis, including issues such as rising sea levels, nuclear waste, biodiversity, and alternative energy. The exhibition ran from September 28, 2022 to December 4, 2022. “Artists approach problems differently. The show is a critique of current hierarchical thinking about ecological problems and a way of developing new and more radical ways to think about technology, equity, and climate,” said Mona Sloane, Senior Research Scientist at NYU RAI.

Mona and Julia spoke with William Tincup, on the Recruiting Daily Podcast, about the use of AI-based personality prediction tools in hiring. “There’s a lot of skepticism about whether personality is a valid construct, and, furthermore, whether you can construct it with the help of testing, especially to a sufficient degree, to then somehow make a connection between someone’s personality and whether or not they would do well on a job for which they’re being interviewed, right?” said Julia.

To kickstart the new year with a bang, Mona spoke about how AI impacts individual digital privacy and civic space on Analytics Insight. “Entities that build and deploy AI often have a vested interest in avoiding sharing the assumptions that underlie a model, as well as the data it was based on and the code that encodes it. To function sporadically well, AI systems often require enormous volumes of data. The procedures used to obtain extractive data can violate privacy. Data will always reflect historical injustices and disparities since it is historically based. Therefore, using it as the foundation for deciding what should occur in the future strengthens existing injustices.”

At the International Labour Organization in Geneva, Mona spoke about how recruiters use AI to find and assess candidates. The seminar covered recent research on how employers can feel empowered to use AI responsibly so they can build a diverse, qualified workforce at scale.