R/AI Newsletter - Research Highlights Issue, April 2023

Introducing Contextual Transparency for Automated Decision Systems

LinkedIn Recruiter, a search tool used by professional job recruiters to find candidates for open positions, would function better if recruiters knew exactly how LinkedIn generates its search query responses. This is what the concept of “contextual transparency” focuses on. In our new paper “Contextual transparency for automated decision systems” (Nature Machine Intelligence, March 2023), NYU R/AI’s Mona Sloane and Julia Stoyanovich collaborated with R/AI alum Ian René Solano-Kamaiko, and with Jun Yuan and Aritra Dasgupta from NJIT, on a new method that combines social science, engineering, and design to automate the creation of “nutritional labels” for Automated Decision Systems (ADS). The label lays bare the explicit and hidden criteria (the ingredients and the recipe) within the algorithms or other technological processes the ADS uses in specific situations, such as candidate sourcing. Contextual transparency can be used to respond to the AI transparency requirements mandated by new and forthcoming AI regulation in the US and Europe, such as NYC Local Law 144 of 2021 or the EU AI Act.

Read more here, and find the Nature Machine Intelligence paper here.

What we’re looking forward to:

The Algorithmic Transparency Playbook: A Stakeholder-first Approach to Creating Transparency in Your Organization’s Algorithms

Andrew Bell, Oded Nov, and Julia Stoyanovich. Course at the 2023 Computer-Human Interaction Conference (CHI’23).

Do you believe in transparency, accountability, and openness for algorithmic systems, like those you hear about in the news, a la ChatGPT?

The NYU Center for Responsible AI will be holding a critically important course at the CHI 2023 conference titled “The Algorithmic Transparency Playbook: A Stakeholder-first Approach for Creating Transparency for Your Organization’s Algorithms!” Participants will learn everything they need to know about algorithmic transparency, and how to help drive change within their organizations toward more open and accountable systems. The course also includes a case study game in which participants explore the tension between key stakeholders vying for and against algorithmic transparency! This course is based on a paper by NYU R/AI’s Andrew Bell and Julia Stoyanovich, in collaboration with Oded Nov (Data & Policy, March 2023).

If you are attending the CHI 2023 conference and are interested in registering for the course, visit this page (note that the course is labeled as Course 31).

Check out the course website, find the playbook, and the full paper.

What we have been looking forward to:

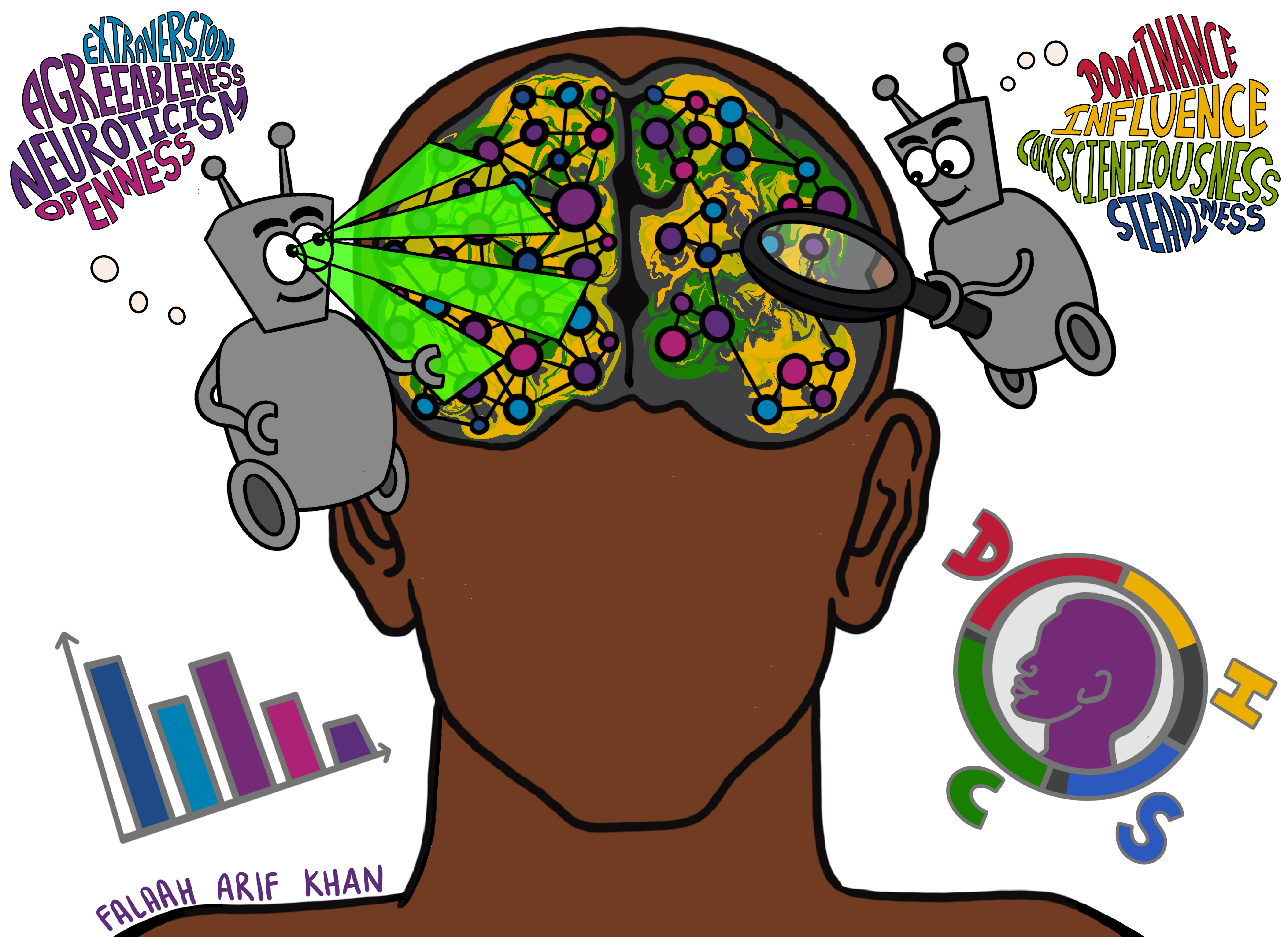

Resume Format, LinkedIn URLs and Other Unexpected Influences on AI Personality Prediction in Hiring: Results of an Audit

Alene Rhea, Kelsey Markey, Lauren D’Arinzo, Hilke Schellmann, Mona Sloane, Paul Squires, and Julia Stoyanovich. In 2022 AAAI/ACM Conference on AI, Ethics, and Society (AIES ’22).

Algorithmic personality tests are in broad use in hiring today, but do they work? We sought to answer this question by interrogating the validity of algorithmic personality tests that claim to estimate a job seeker’s personality based on their resume or social media profile. We developed a methodology for auditing the stability of predictions made by these tests. Crucially, we framed our methodology around testing the assumptions made by the vendors of these tools. We used this methodology to conduct an external audit of two commercial systems, Humantic AI and Crystal, over a dataset of job applicant profiles collected through an IRB-approved study. The key take-away is that both systems show instability on key facets of measurement, and so cannot be considered valid testing instruments for pre-hire assessment.
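To give a feel for what "stability" can mean here, below is a minimal sketch of one possible check: score the same candidates twice (say, from a PDF resume and from a plain-text resume) and see whether the tool ranks them in the same order. This is an illustration only, not the audit's code or its exact metrics. The function names and the scores are invented for the example, and Spearman rank correlation is our assumed choice of measure.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; assumes neither input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return covariance / (spread_x * spread_y)


def rank_stability(scores_a, scores_b):
    """Spearman rank correlation between two score lists for the same people."""
    return pearson(average_ranks(scores_a), average_ranks(scores_b))


# Hypothetical scores for the same five candidates, invented for illustration.
scores_from_pdf = [0.81, 0.42, 0.65, 0.30, 0.77]
scores_from_text = [0.40, 0.79, 0.61, 0.35, 0.52]
stability = rank_stability(scores_from_pdf, scores_from_text)
print(f"rank stability: {stability:.2f}")
```

A value near 1 means the two input versions order candidates the same way. A value near 0 means the ordering under one format tells you little about the ordering under the other.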

Read more about our audit, and watch a 15-minute video for a summary of our methods and findings. And take a look at press coverage of these results in Forbes and HR Drive.

Fairness in Ranking: From Values to Technical Choices and Back

Julia Stoyanovich, Meike Zehlike, and Ke Yang. Tutorial at The Web Conference 2023 (WWW’23).

In the past few years, there has been much work on incorporating fairness requirements into the design of algorithmic rankers, with contributions from the data management, algorithms, information retrieval, and recommender systems communities.

Julia Stoyanovich, NYU R/AI alum Ke Yang, and Meike Zehlike will give a tutorial on Fairness in Ranking at The Web Conference (WWW’23). This tutorial, based on a recent two-part survey that appeared in ACM Computing Surveys (part 1, part 2), offers a broad perspective that connects formalizations and algorithmic approaches to fair ranking across subfields. During the introductory part of the tutorial, we will present a classification framework for fairness-enhancing interventions, along which we will then relate the technical methods. Next, during the main part of the tutorial, we will discuss fairness in score-based ranking and in supervised learning-to-rank. We will conclude with recommendations for practitioners, to help them select a fair ranking method based on the requirements of their specific application domain.
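For readers new to the topic, here is a deliberately simple sketch of what a fairness constraint on a score-based ranking can look like: require that every prefix of the ranking contains at least a minimum share of candidates from a protected group, and otherwise order by score. This is not a method taken from the survey or the tutorial. The `Candidate` type, the function names, the greedy strategy, and the data are all assumptions made for illustration.

```python
import math
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    score: float
    protected: bool


def protected_share_at_k(ranking, k):
    """Fraction of the top-k that belongs to the protected group (k >= 1)."""
    top = ranking[:k]
    return sum(c.protected for c in top) / len(top)


def rerank_with_min_share(candidates, min_share):
    """Greedy re-ranking: each prefix of length p gets at least
    floor(min_share * p) protected candidates while any remain;
    otherwise the highest-scoring remaining candidate is placed next."""
    by_score = sorted(candidates, key=lambda c: c.score, reverse=True)
    protected = [c for c in by_score if c.protected]
    others = [c for c in by_score if not c.protected]
    ranking = []
    placed_protected = 0
    while protected or others:
        required = math.floor(min_share * (len(ranking) + 1))
        must_take_protected = placed_protected < required and protected
        if must_take_protected or not others:
            chosen = protected.pop(0)
        elif not protected or others[0].score >= protected[0].score:
            chosen = others.pop(0)
        else:
            chosen = protected.pop(0)
        placed_protected += chosen.protected
        ranking.append(chosen)
    return ranking


# Hypothetical pool of six candidates, invented for illustration.
pool = [
    Candidate("A", 0.9, False), Candidate("B", 0.8, False),
    Candidate("C", 0.7, False), Candidate("D", 0.6, True),
    Candidate("E", 0.5, True), Candidate("F", 0.4, False),
]
by_score_only = sorted(pool, key=lambda c: c.score, reverse=True)
fair_ranking = rerank_with_min_share(pool, min_share=0.5)
print(protected_share_at_k(by_score_only, 4), protected_share_at_k(fair_ranking, 4))
```

The design choice to look at every prefix, rather than only the full list, reflects the fact that attention in a ranking is concentrated at the top. Real methods differ in how they define and enforce such constraints, which is what the tutorial's classification framework is meant to sort out.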

Automated Data Cleaning Can Hurt Fairness in ML-based Decision Making

Shubha Guha, Falaah Arif Khan, Julia Stoyanovich, and Sebastian Schelter. In Proceedings of the 39th IEEE International Conference on Data Engineering (ICDE’23).

In this work, NYU R/AI’s Julia Stoyanovich and Falaah Arif Khan collaborated with Shubha Guha and Sebastian Schelter from the University of Amsterdam. We interrogate whether data quality issues track demographic characteristics such as sex, race and age, and whether automated data cleaning — of the kind commonly used in production ML systems — impacts the fairness of predictions made by these systems. We find that the incidence of data errors, specifically missing values, outliers and mislabels, does not (significantly) track demographic characteristics, but the downstream impact of automated cleaning is more likely to be negative from a fairness perspective (increases disparity in predictive performance) than positive. This finding is both significant and worrying, given that it potentially implicates many production ML systems, and is an indication that we need to envision new data cleaning methods — ones that are fairness-aware.
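As a rough illustration of "disparity in predictive performance," the sketch below compares the accuracy gap between two demographic groups for predictions made before and after a cleaning step. It is a toy example under stated assumptions: the labels, predictions, and group assignments are made up, the function names are ours, and the paper's actual experimental setup and metrics are not reproduced here.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def accuracy_gap(y_true, y_pred, groups, group_a, group_b):
    """Absolute difference in accuracy between two groups."""
    def group_accuracy(group):
        pairs = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
        return accuracy([t for t, _ in pairs], [p for _, p in pairs])
    return abs(group_accuracy(group_a) - group_accuracy(group_b))


# Made-up labels and predictions for eight people in two groups.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
pred_before_cleaning = [1, 0, 1, 1, 1, 0, 0, 0]
pred_after_cleaning = [1, 0, 1, 0, 1, 1, 0, 0]

gap_before = accuracy_gap(y_true, pred_before_cleaning, groups, "a", "b")
gap_after = accuracy_gap(y_true, pred_after_cleaning, groups, "a", "b")
print(f"gap before: {gap_before:.2f}, gap after: {gap_after:.2f}")
```

In this invented example, overall accuracy is the same before and after cleaning (6 of 8 correct), yet the gap between groups grows. That is the kind of effect an aggregate metric hides and a per-group comparison reveals.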

Join the “Special Track Paper Session 2” at 4pm PDT on April 6th to see the oral presentation, and stay for the Q&A!

All Aboard! Making AI Education Accessible

Falaah Arif Khan, Lucius Bynum, Amy Hurst, Lucas Rosenblatt, Meghana Shanbhogue, Mona Sloane, Julia Stoyanovich

Virtual launch event in collaboration with the New York Public Library

The All Aboard! Primer is the outcome of three roundtables that R/AI hosted between AI and social science scholars and disability experts and activists. The Primer makes concrete recommendations for making public AI education more accessible and provides:

• Best practices and guidelines for making text-based and visual educational content accessible.
• A case study that illustrates how comics can be used to accessibly communicate AI concepts to the general public.
• Pointers to free resources you can use to improve the accessibility of educational content you are developing.

Join us for a discussion on how we can all make a difference in making public AI education more accessible!

What we have been up to:

Responsible AI Rockstar series: Moshe Vardi visits NYU!

Lucius E.J. Bynum, Falaah Arif Khan, Oleksandra Konopatska, Joshua R. Loftus, and Julia Stoyanovich

The RAI Rockstar series continued with a visit by Moshe Vardi, University Professor and George Distinguished Service Professor in Computational Engineering at Rice University. Moshe is the recipient of several awards, including the ACM SIGACT Goedel Prize, the ACM Kanellakis Award, the IEEE Computer Society Goode Award, and the EATCS Distinguished Achievements Award. He is a Senior Editor of Communications of the ACM, the premier publication in computing.

The talk, “How to be an Ethical Computer Scientist,” was held at 11am on Friday, February 10, 2023, at the NYU Center for Data Science.

Events and Press Coverage

Members of R/AI spoke about our research results and recent developments in AI regulation.

Julia Stoyanovich and Stefaan Verhulst co-moderated the Global Perspectives on AI Ethics Panel #12.

“An A.I. Start-Up Boomed, but Now It Faces a Slowing Economy and New Rules,” an article in the New York Times, discusses how AI is transforming the hiring process for companies. “We need to be very careful about how we use these technologies, especially in the hiring process,” said Julia.

In a Time magazine article, “The Humane Response to the Robots Taking Over Our World,” Julia expressed her concern about ChatGPT. She cautioned that the goal of many programs “is not to generate new text that is accurate. Or morally justifiable. It’s to sound like a human.” And so it’s entirely appropriate to treat them that way and call them out, Julia suggests, for what they sometimes are: “Bullshitters.”

In a Bloomberg Law article, “Workplace AI Vendors, Employers Rush to Set Bias Auditing Bar,” Mona Sloane spoke about the lack of AI audit standards. “There is a race going on for setting the standard by way of doing it rather than waiting for a government agency to say, ‘This is what an audit of hiring AI should look like’.”

Mona Sloane co-authored the article “The shake-up of the tech sector shows: we must learn from finance regulation.” The authors call for collaboration between the tech industry and regulators to create effective and efficient regulations.

Julia spoke at a conference, “Women Designing the Future: Artificial Intelligence/Real Human Lives,” hosted by NJIT. Watch the full recording on YouTube or read the story.